Impact of Studio versus Field Imagery on CNN Performance for Mollusc Shell Analysis

Published on: 28 May 2025

Introduction

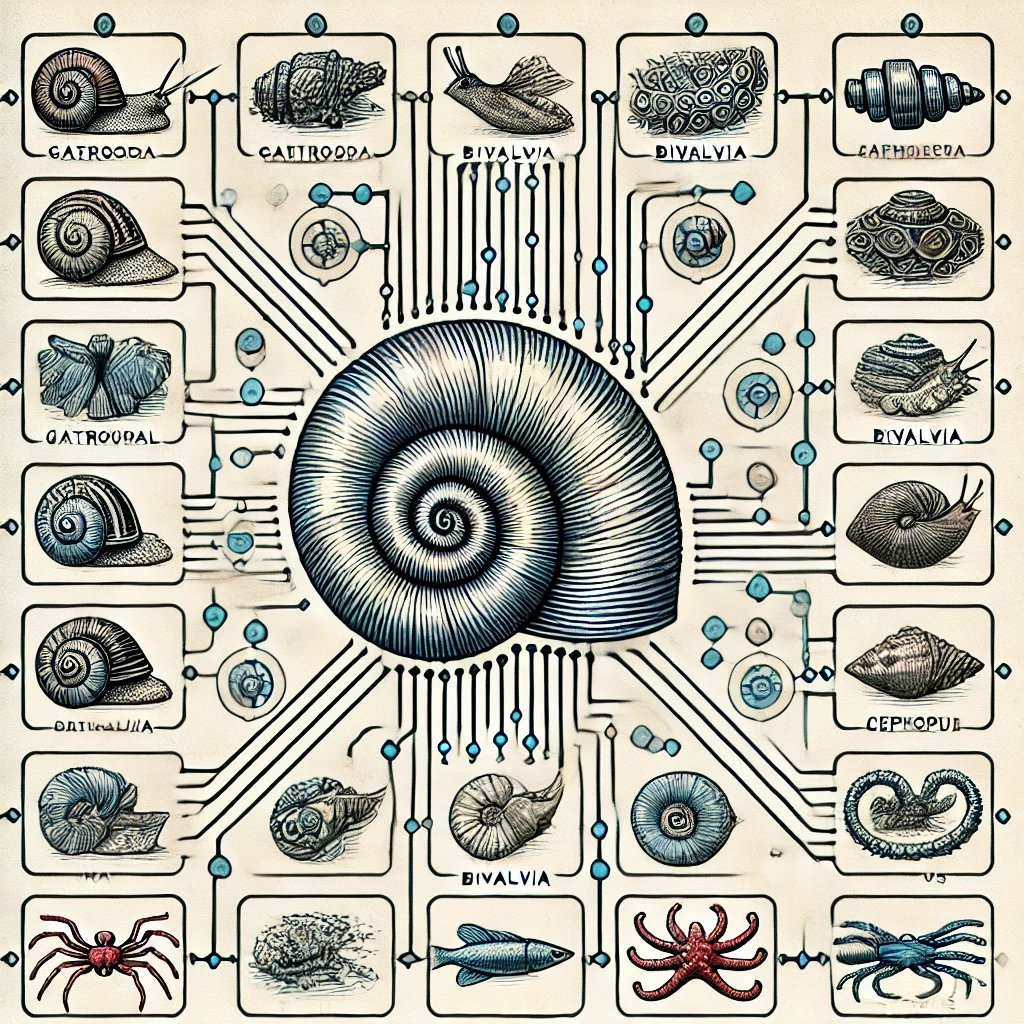

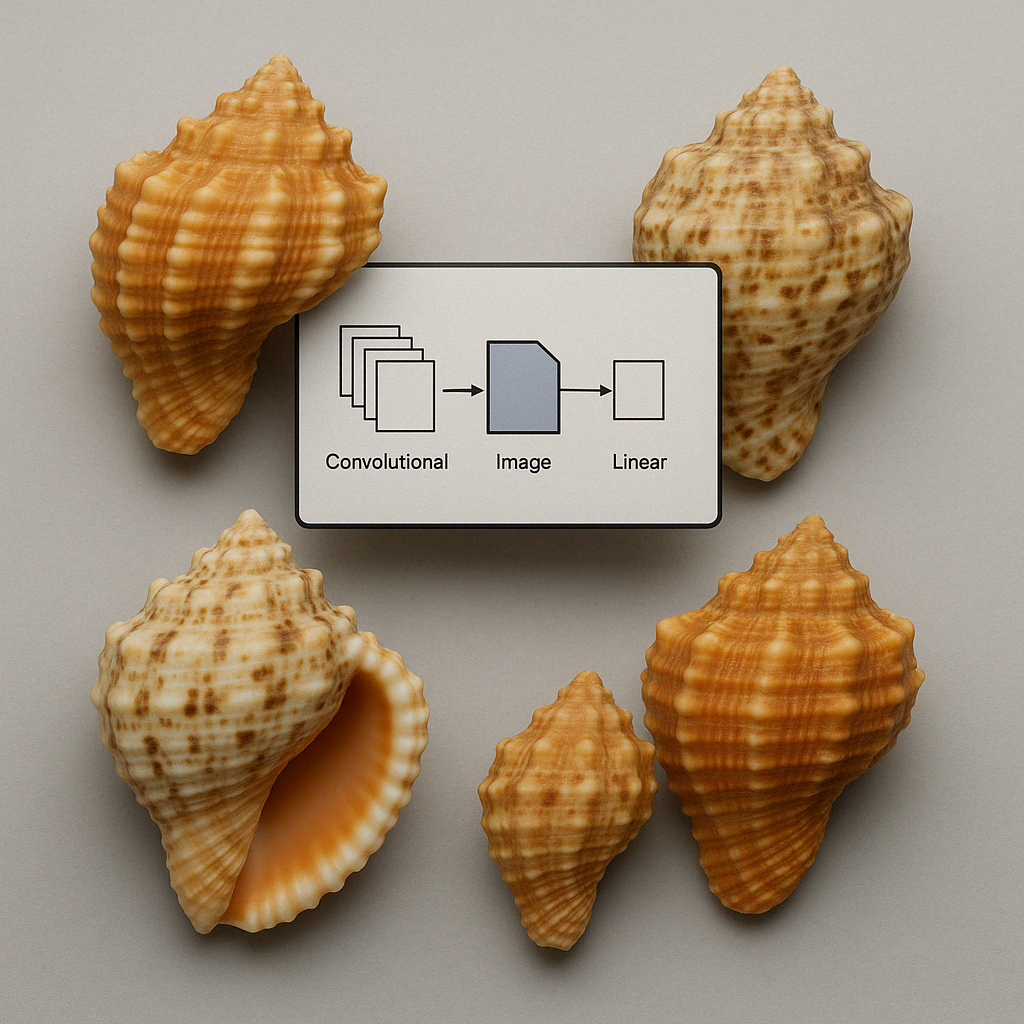

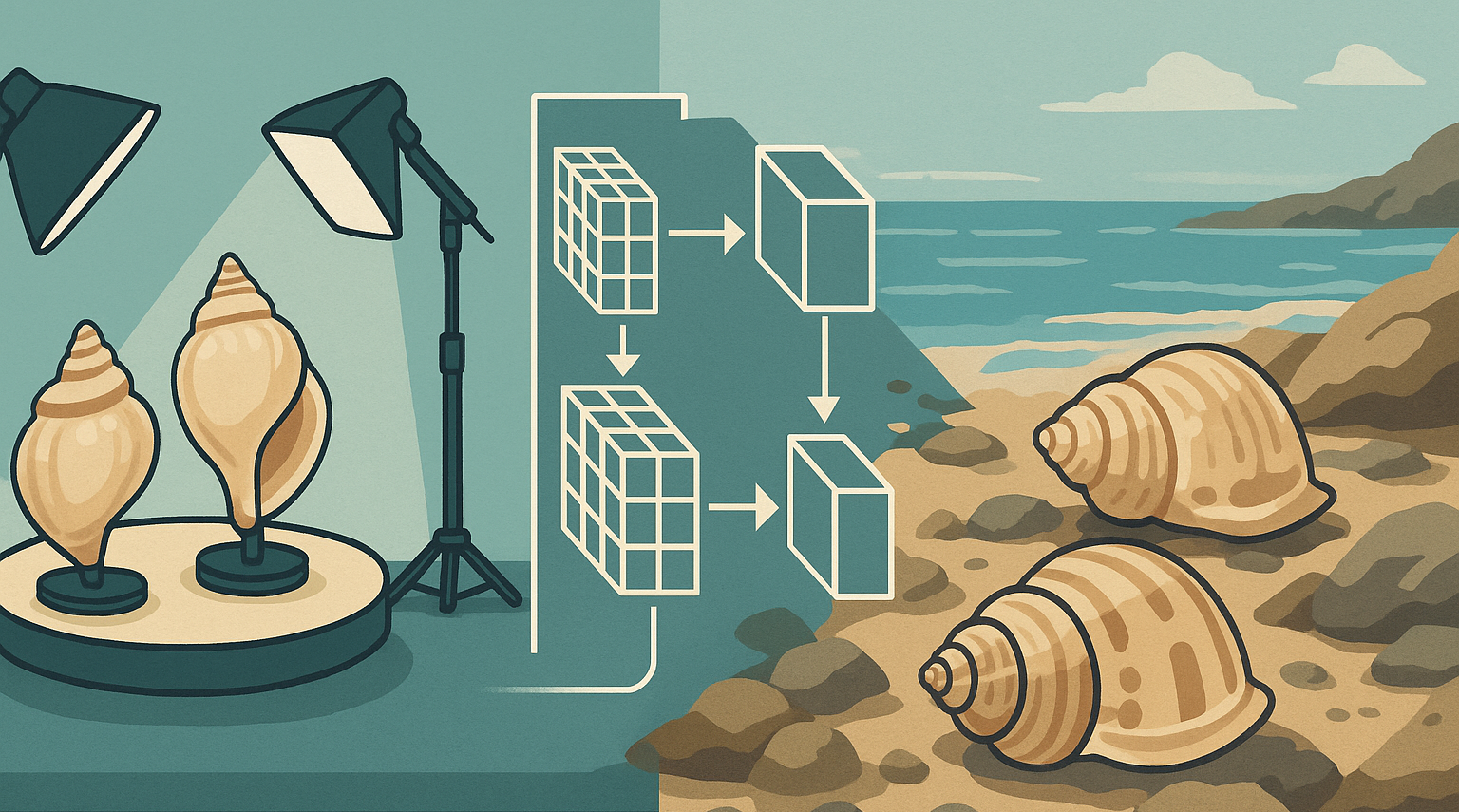

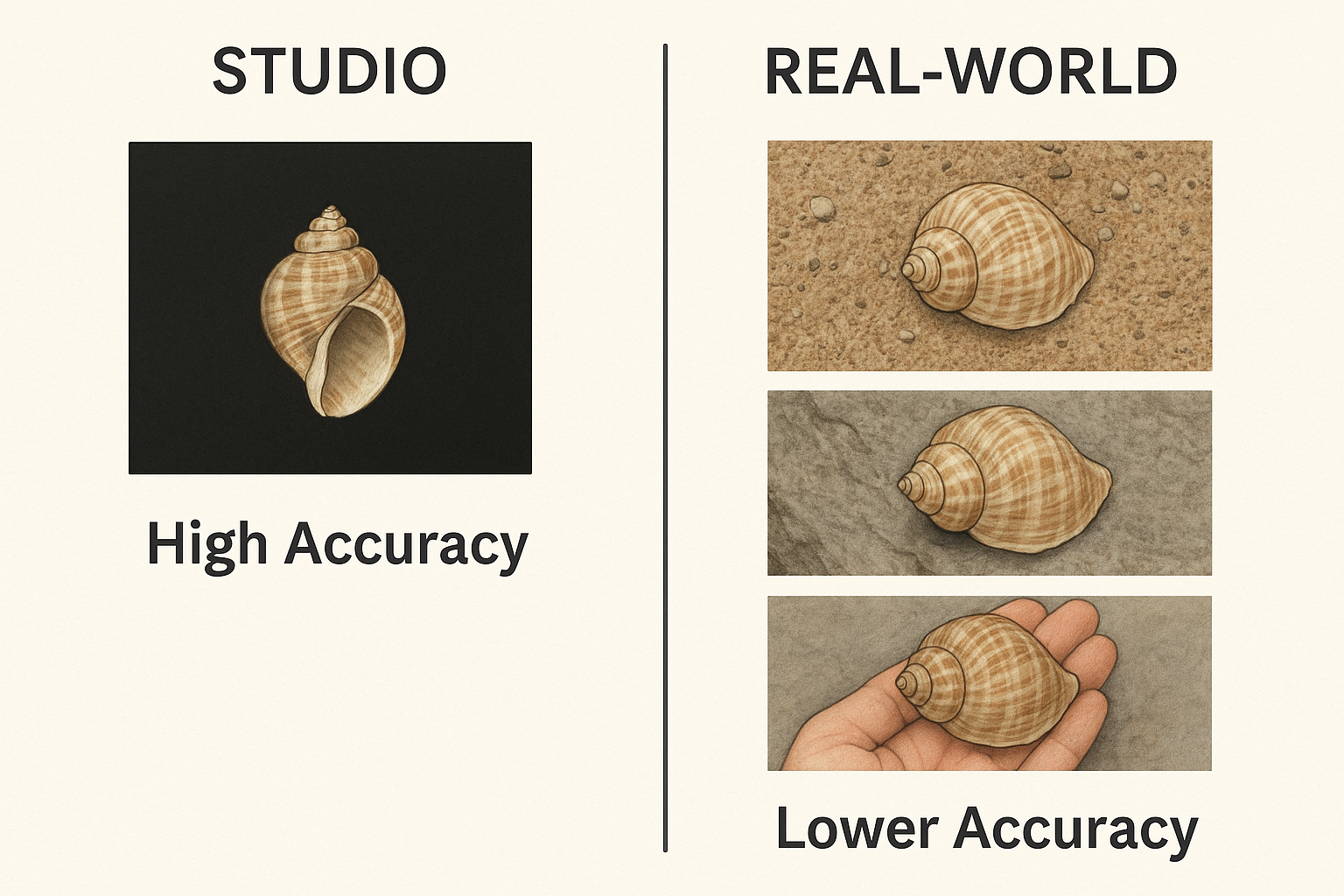

Convolutional Neural Networks (CNNs) have become increasingly integral to image-based biological research, offering powerful tools for tasks such as species identification and morphological analysis. Their efficacy stems from the inherent ability to autonomously learn hierarchical features directly from image data. A critical consideration when developing these models is the image domain used for training: studio (controlled) images versus field (natural) images [1, 2]. Studio images are typically taken under controlled conditions (uniform background, consistent lighting, shell centered and unobstructed), whereas field images depict shells in natural, often cluttered environments (e.g. on sand, among rocks, under variable lighting). This choice of training domain can significantly affect model performance and the features that CNNs learn, influencing whether the network focuses on intrinsic shell characteristics or extraneous environmental cues.

Figure 1: Example photographs of Conus ventricosus, a cone snail from the Mediterranean Sea. Left: a studio image of an intact shell on a black background. The middle images show conditions that might confuse a CNN: a distracting background (second from left) and eroded shells on white tissue. Right: a field image of an eroded shell in which the background occupies a large proportion of the frame.

We discuss how studio vs. field imagery affects CNN-based mollusc shell analysis in three use cases:

- Morphological research (e.g. studying species variability or shell shape patterns)

- Species classification (identifying mollusc species from images)

- In-situ detection and classification (finding and recognizing shells in natural, noisy backgrounds)

CNN Feature Learning: Influence of Image Acquisition Conditions

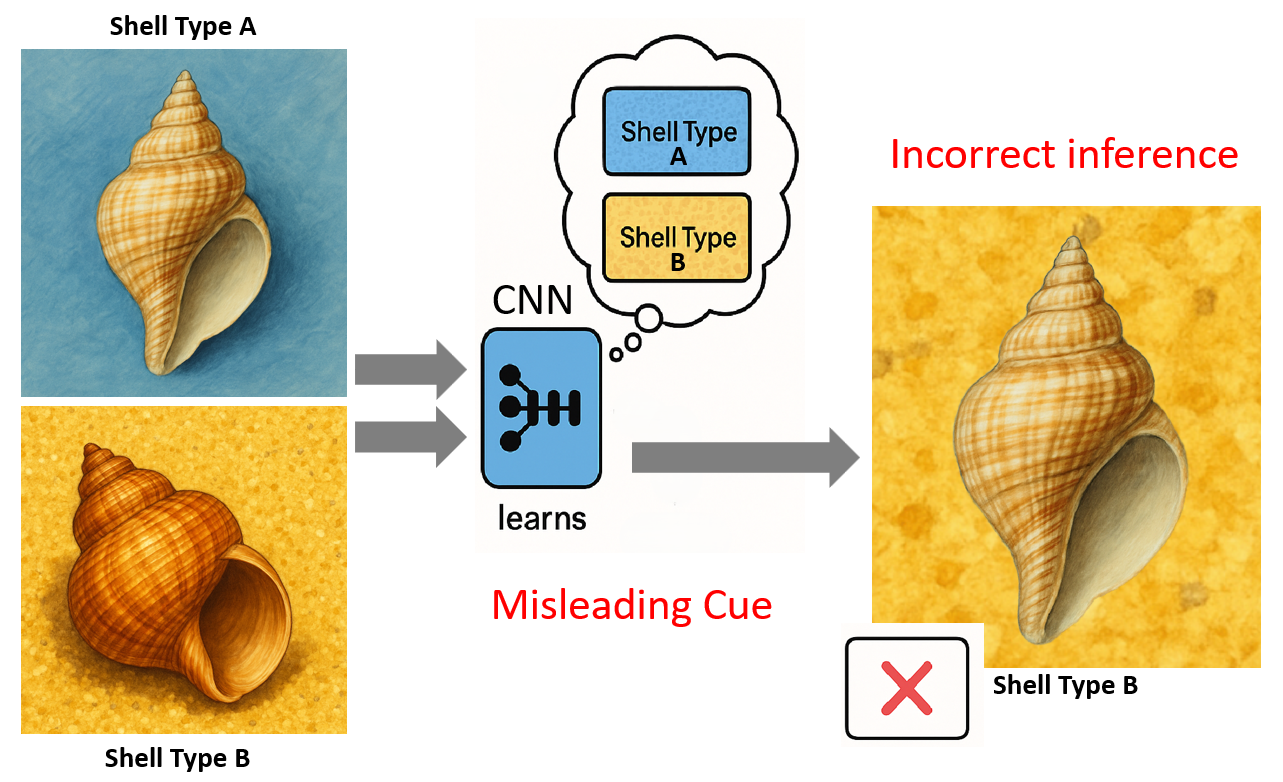

The domain of training images can bias a CNN towards different feature representations. Studio images (e.g. shells photographed against plain backgrounds) tend to emphasize the shell’s intrinsic features – shape geometry, color pattern, texture – because distracting context is minimized. In contrast, field images (e.g. shells in sand or underwater) contain varied backgrounds, lighting, and other objects, introducing extrinsic context that a CNN may also learn. If not carefully managed, a network can latch onto spurious contextual cues correlated with the target class rather than the shell itself. For example, a species often photographed on coral reef might be recognized by the CNN partly via the reef background instead of shell morphology. Indeed, studies have noted that models trained on biased datasets can unfairly use background or contextual objects for prediction [3].

Studio Imagery (Controlled Environments)

Studio imagery is defined by its consistency: controlled illumination, homogeneous backgrounds (often simple and non-distracting), standardized specimen orientation to present key diagnostic features, and typically high resolution. These efforts aim to minimize "nuisance" variables that could interfere with the analysis of the shell itself [1, 4].

This controlled environment has a profound impact on the features a CNN learns. With minimal distracting background signals and consistent lighting, the network is encouraged to focus on the intrinsic morphological characteristics of the seashell. These can include fine-scale textures, subtle patterns of shell whorls, the precise shape of the aperture, or intricate sculptural details. Such feature learning mirrors successes in other domains, like medical image analysis, where controlled image acquisition allows CNNs to learn detailed diagnostic features. The potential for high-fidelity capture of these minute details is particularly attractive for studies aiming to distinguish subtle inter- and intra-specific variations, which are the cornerstone of many morphometric analyses [1, 5].

However, the very control that facilitates this focus can also be a pitfall. There is a considerable risk that the CNN may learn "shortcut" features associated with the controlled setup itself. These shortcuts could be subtle but consistent lighting artifacts, minor textural variations in the "uniform" background, or even systematic noise patterns from the camera sensor, especially if these elements are inadvertently correlated with class labels (e.g., if all specimens of Species A are photographed on a slightly different background cloth than Species B). Research by Erukude et al. [4] demonstrates that even visually controlled datasets can encode systematic, acquisition-specific signals that are exploitable by deep neural networks, highlighting that dataset bias can persist despite standardized imaging protocols. If a CNN consistently observes seashells of a particular species only under a specific lighting angle or against a particular backdrop, it may erroneously incorporate these environmental constants into its feature representation for that species. This represents a form of overfitting to the acquisition context rather than learning of the universal morphology of the seashell. Consequently, the learned features might exhibit poor robustness to even minor deviations from the strict studio protocol if the training data do not encompass a sufficient range of controlled variations [4, 6].
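One inexpensive way to probe for such acquisition shortcuts is a background-only baseline: if a trivial classifier that sees only the image border (assumed to be background in centered studio shots) predicts species well above chance, the backdrop carries label information a CNN could exploit. The sketch below is our own illustration, not the method of [4]; the function names, the 8-pixel border width, and the leave-one-out nearest-centroid classifier are all assumptions chosen for simplicity.

```python
import numpy as np

def border_features(image: np.ndarray, width: int = 8) -> np.ndarray:
    """Mean and standard deviation of each color channel over a border ring.

    Assumes an HxWx3 image in which the shell is centered, so that the outer
    `width` pixels on every side are (mostly) background.
    """
    ring = np.ones(image.shape[:2], dtype=bool)
    ring[width:-width, width:-width] = False   # keep only the outer ring
    pixels = image[ring].astype(float)         # shape (n_ring_pixels, 3)
    return np.concatenate([pixels.mean(axis=0), pixels.std(axis=0)])


def background_leakage_accuracy(images, labels, width: int = 8) -> float:
    """Leave-one-out nearest-centroid accuracy using background features only.

    Scores close to 1.0 mean the background alone predicts the label, i.e. a
    shortcut a CNN could exploit; chance level is roughly 1 / number of classes.
    """
    feats = np.stack([border_features(img, width) for img in images])
    labels = np.asarray(labels)
    correct = 0
    for i in range(len(feats)):
        keep = np.arange(len(feats)) != i                  # hold out image i
        train_f, train_y = feats[keep], labels[keep]
        classes = np.unique(train_y)
        centroids = np.stack([train_f[train_y == c].mean(axis=0) for c in classes])
        pred = classes[np.argmin(np.linalg.norm(centroids - feats[i], axis=1))]
        correct += int(pred == labels[i])
    return correct / len(feats)
```

On a dataset where two species were shot on cloths differing only slightly in shade, this baseline can approach 1.0 even though no shell pixel is used, which is exactly the situation described above.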

Field Imagery (Uncontrolled Environments)

Field imagery of seashells is characterized by its inherent variability and complexity. Illumination is subject to natural light conditions, including direct sun, shadows, and, if underwater, turbidity and light attenuation [7]. Backgrounds are typically complex and diverse, featuring elements like sand, rocks, seaweed, detritus, and other organisms. Shells may be partially occluded by sediment or epibionts, present in non-standardized poses, and image quality can vary due to motion, focus issues, or environmental conditions.

Training a CNN on such imagery can encourage the learning of features that are more tolerant to nuisance variation (lighting, pose, partial occlusion), provided that class–context correlations are controlled and that each species is observed across diverse backgrounds [1]. The network must learn to identify the seashell despite changes in lighting, partial views, or distracting surroundings. This often leads to the development of features that are more "holistic" or context-aware. For instance, a CNN might learn to associate certain shell shapes or patterns with typical environmental cues if these are consistently correlated with species presence in the training data [8]. The network might learn, for example, that a particular elongated shell form is frequently found partially buried in coarse sand, incorporating this contextual information into its decision-making process.

However, this learning environment also carries a high risk of "shortcut learning". If certain background elements or contextual cues are easier for the CNN to learn and are spuriously correlated with specific seashell species, the network may rely on these shortcuts rather than on the shell's intrinsic morphology. For example, if a particular species is predominantly photographed on dark volcanic rock, the CNN might learn "dark volcanic rock" as a primary identifier. While this might lead to high accuracy on test sets with similar contextual biases, the model would likely fail if the species is encountered on a different substrate. This is a critical concern, as fine-grained morphological details, which are often less salient or less consistently visible in variable field conditions, might be "washed out" or deemed less important by the CNN if they are less discriminative than these contextual shortcuts. Increased image variability, as often found in field data, can reduce the consistency with which fine-grained morphological cues are visible, thereby limiting their reliability as discriminative features during training [6, 9].

Figure 2: Conceptual illustration of shortcut learning. (a) A CNN is trained on biased data in which Shell Type A always appears on a blue background and Shell Type B on yellow gravel. (b) The CNN erroneously learns the background as a primary distinguishing feature. (c) Misclassification occurs when Shell Type A is presented in a novel context (yellow gravel), as the model relies on the learned spurious background correlation.
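If each field image carries even a coarse background annotation (e.g. "rock", "sand", "blue"), the class–background correlation illustrated in Figure 2 can be quantified before training. The sketch below computes Cramér's V, V = sqrt(χ² / (n · (min(r, c) − 1))), from the species-by-background contingency table: values near 0 mean backgrounds are spread evenly across species, values near 1 mean the background alone almost identifies the species. The background annotations and the function name are assumptions for illustration, and what counts as "too high" remains a judgment call.

```python
import numpy as np

def cramers_v(classes, backgrounds) -> float:
    """Cramér's V between two categorical label sequences (0 = no association, 1 = perfect)."""
    class_ids, c_idx = np.unique(np.asarray(classes), return_inverse=True)
    bg_ids, b_idx = np.unique(np.asarray(backgrounds), return_inverse=True)
    table = np.zeros((len(class_ids), len(bg_ids)))
    np.add.at(table, (c_idx, b_idx), 1)                 # contingency table of counts
    n = table.sum()
    expected = np.outer(table.sum(axis=1), table.sum(axis=0)) / n
    chi2 = ((table - expected) ** 2 / expected).sum()   # Pearson chi-squared statistic
    k = min(table.shape) - 1
    return float(np.sqrt(chi2 / (n * k))) if k > 0 else 0.0
```

For the biased dataset of Figure 2 (Type A always on blue, Type B always on yellow gravel), this returns 1.0; a balanced design in which each type appears equally often on both backgrounds returns 0.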

The inherent nature of field imagery trains the CNN for invariance to a broad range of nuisance variables. This is advantageous for robust classification in dynamic environments. However, it can become detrimental if these nuisance variables covary with subtle morphological traits that are essential for fine-grained variability studies. For example, if a delicate sculptural ridge on a shell is frequently obscured by sand or biofouling in field images of a particular species, the CNN might learn to de-prioritize that feature for identification, relying instead on other, more consistently visible characteristics. This learned invariance to occlusion is beneficial for general field identification but problematic if that specific ridge is a key morphometric marker.

The complexity of field backgrounds can also compel CNNs to implicitly learn more sophisticated object detection and segmentation capabilities, even if not explicitly trained for these tasks. To successfully identify a camouflaged seashell, the network must learn to effectively distinguish it from a visually complex background. This process could, in principle, refine its understanding of the seashell's boundary and overall shape. However, there is a concurrent risk: if certain background textures or elements are consistently present with a particular species (e.g., a specific type of encrusting algae), the CNN might erroneously incorporate these background features into the seashell's learned feature representation. This potential for the background to "bleed into" the object's features is a well-recognized challenge in computer vision, particularly when dealing with contextual cues and strong background influences [8, 10].

Table 1: Comparative Analysis of Studio vs. Field Imagery for CNN-based Seashell Research.

| Characteristic | Studio Imagery | Field Imagery |
|---|---|---|
| Image Acquisition | | |
| Lighting | Controlled, consistent, optimal illumination | Variable, natural (sun, shade, overcast), shadows, glare, underwater attenuation, turbidity effects |
| Background | Uniform, plain, artificial, designed to be non-distracting | Complex, natural, variable (sand, rocks, algae, mud, water surface, other organisms, debris) |
| Specimen Pose/Orientation | Standardized, often optimal for showcasing diagnostic features | Variable, often non-optimal, partial views due to burial or natural positioning |
| Occlusion | Minimal/none; specimen is cleaned and isolated | Common (sediment, debris, epibionts, other organisms, self-occlusion due to pose) |
| Image Quality | Generally high: consistent resolution, good focus, minimal noise | Variable: potential for blur (motion, focus), noise (low light, sensor), color cast, compression artifacts |
| Typical CNN Learned Features | | |
| Object-Intrinsic Detail | High potential for learning fine morphological features (texture, sculpture, fine lines) | Potentially lower emphasis on the finest details if they are often obscured, variable, or less discriminative than context |
| Contextual Features | Minimal by design; risk of learning studio artifacts (lighting patterns, background traces) | High potential for learning features related to natural habitat, substrate, or co-occurring elements |
| Robustness to Variation | Low: often poor out-of-domain robustness unless explicit augmentation or domain randomization is applied | Higher: potentially more robust to nuisance variation (lighting, pose, occlusion), but sensitive to context bias if class–background correlations are present |
| Risk of Shortcut Learning | To subtle, consistent studio artifacts or imaging-protocol elements | To common background elements, contextual cues, or consistent environmental conditions correlated with species |
| Suitability for Species Variability Studies (Morphometrics) | | |
| Pros | Standardized pose, lighting, and background favor high-fidelity capture of fine morphological details and consistent comparison across specimens. | Captures natural morphological variation in situ, avoiding preservation artifacts, if features can be robustly extracted. |
| Cons | Risk of model overfitting to the studio setup; features may not be truly intrinsic if influenced by artifacts; may not represent natural variation. | Background noise, pose, lighting, and occlusions can obscure, distort, or impede the precise extraction of fine-grained features essential for morphometrics. |
| Suitability for Field Classification | | |
| Pros | Can provide clean, well-labeled training data if domain adaptation to field conditions is effective. | Essential for training robust, generalizable models for in-situ identification; learns features relevant to field conditions. |
| Cons | Poor direct generalization to field conditions due to domain shift unless domain adaptation, augmentation, or mixed-domain training is applied. | May learn spurious correlations if the dataset is biased; fine distinguishing details might be ignored in favor of robust cues. |
| Key Challenges | | |
| Domain-specific | Ensuring learned features are truly object-intrinsic and not artifacts; achieving generalization beyond the specific studio setup. | Disentangling the object from complex backgrounds; avoiding shortcut learning; ensuring sufficient detail is learned for reliable identification across varied conditions. |
| Shared (both) | Dataset bias (species representation, geographic coverage, specimen condition); domain shift if applied to a significantly different imaging modality or setup. | Same as studio imagery. |

Use Case 1: Morphological Research (Shell Shape and Structure)

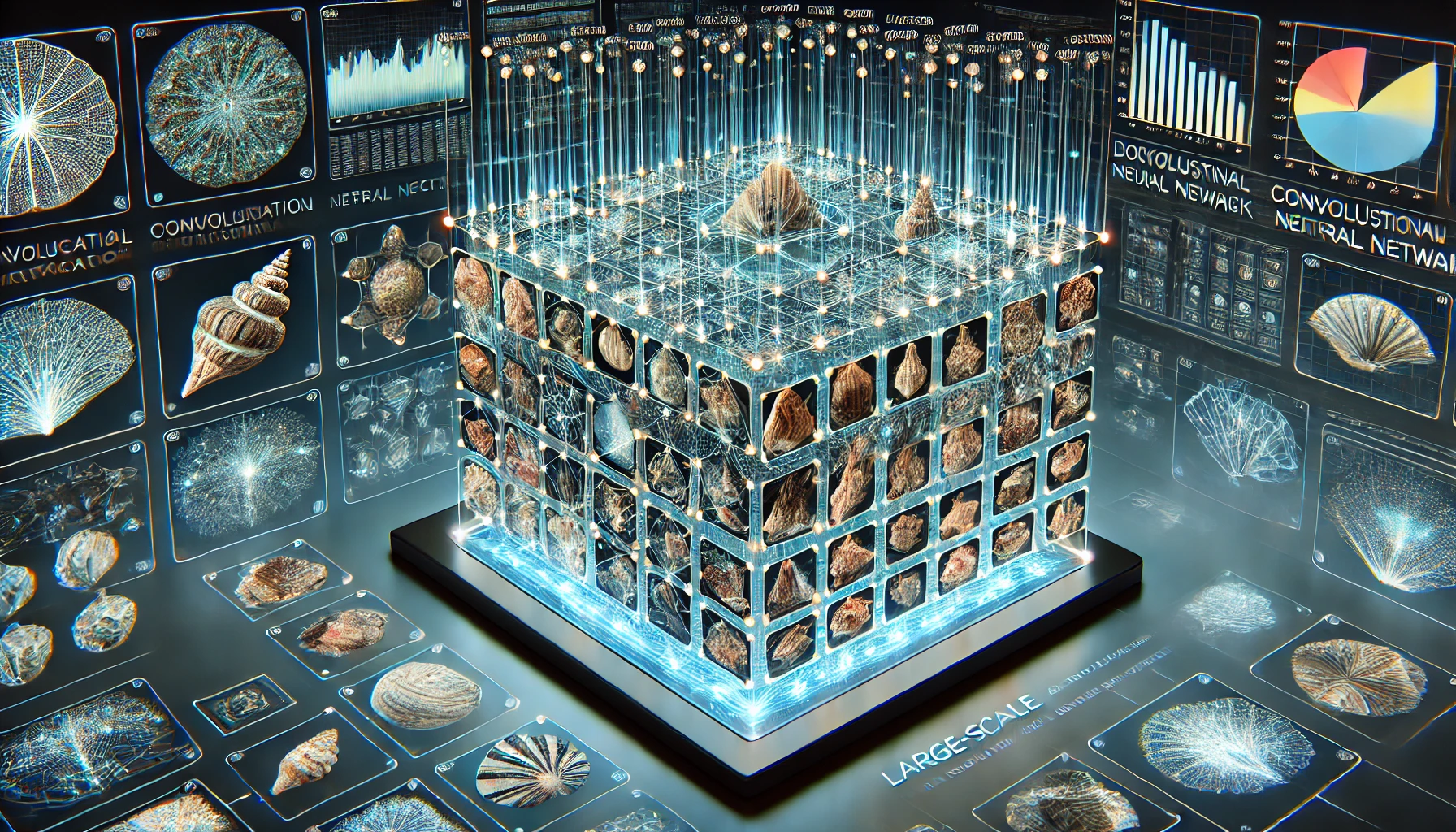

Researchers studying shell morphology (such as shape variation within/between species, or geometric morphometrics) and species variability typically require the model to focus on intrinsic shell characteristics. Here, controlled studio images are highly beneficial. By photographing shells in standardized orientations and backgrounds, one ensures that any features the CNN learns (or any shape descriptors it extracts) relate to the shell’s form and pattern rather than environmental noise. For instance, Zhang et al. (2019) [11] compiled a dataset of 59,244 shell images representing 7,894 species, with each shell specimen photographed in a consistent manner – two views (frontal and lateral) per shell on a uniform background. This standardization captures the shell’s color, shape, and texture features while eliminating background variation. Models trained on such data can more easily learn morphometric features like spire height, aperture shape, ribbing, or coloration patterns that are intrinsic to the shell. In practice, this has enabled classification and analysis of fine-grained shell differences. For example, CNNs trained on controlled images have achieved expert-level discrimination among visually similar shells by focusing on subtle pattern differences [12]. Qasmi et al. (2024) [13] used ~47,600 studio-like images of Conus shells (cone snails) and attained ~95.8% identification accuracy by extracting deep features with VGG16 and classifying with Random Forest [13]. Such high performance suggests the model effectively learned the shells’ intrinsic patterns (color banding, geometric outlines), which is feasible largely because the training images were homogeneous and free of confounding backgrounds.

In morphological research, it’s often desirable to quantify shape or compare forms across specimens. Traditional approaches use geometric morphometrics (landmarks or outlines) to capture shape, but CNNs on images can now play a similar role [14]. However, to trust that a CNN is analyzing shape and not something irrelevant, images should be as clean as possible. Researchers frequently go as far as segmenting the shell from any background in images before analysis. In one study on bivalve shells, Concepcion et al. (2023) [15] applied a lazy snapping segmentation to isolate each cockle shell in the photo, then extracted shape and texture features for geographic traceability analysis [15]. By removing the background and focusing on the shell’s outline and surface, they derived features like shell color indices (R, a*, b* values), surface entropy (texture), and shell diameter [15] – all intrinsic properties. This approach highlights that for morphology-centric use, studio images or segmented images are preferred. A controlled image set ensures the CNN (or any feature extractor) isn’t distracted by extrinsic elements and instead learns a representation of shell form that correlates with biological variation. Indeed, when images are inconsistent or noisy, morphological signals can be drowned out. As a caution, a CNN trained on field images for morphometric analysis may inadvertently incorporate environmental differences (such as substrate color or background texture) into its learned representation, potentially confounding genuine shape variation. For studies focused on shell shape, size, or structural differences, training on studio images — or more generally, on background-controlled or segmented imagery — is therefore recommended. Such data increase the likelihood that learned features emphasize intrinsic morphological characteristics rather than contextual cues. 
Additionally, consistent studio imagery facilitates the interpretation of CNN attention maps, as saliency methods are more likely to highlight shell-intrinsic structures (e.g., ridges, apertures, or surface sculpture) rather than background regions.
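Both ideas in this paragraph, measuring only shell pixels and checking where a model "looks", can be sketched with a binary shell mask produced by lazy snapping or any other segmentation step. The descriptors below are a hypothetical, simplified stand-in inspired by, but not reproducing, the features of [15]: mean RGB inside the mask (rather than the a*, b* color indices), Shannon entropy of the gray-level histogram inside the mask as a texture proxy, the equivalent-circle diameter in pixels, and an elongation ratio from the principal axes of the mask. `saliency_in_mask` is likewise an illustrative metric, assumed here: the fraction of a non-negative saliency map's mass that falls on the shell.

```python
import numpy as np

def shell_features(image: np.ndarray, mask: np.ndarray, bins: int = 32) -> dict:
    """Simple shell-intrinsic descriptors computed only from pixels inside `mask`.

    image: HxWx3 array with values in [0, 255]; mask: HxW boolean array (True = shell).
    """
    pixels = image[mask].astype(float)                  # (n_shell_pixels, 3)
    gray = pixels.mean(axis=1)
    hist, _ = np.histogram(gray, bins=bins, range=(0, 255))
    p = hist[hist > 0] / hist.sum()
    entropy = float(-(p * np.log2(p)).sum())            # texture proxy, in bits
    area = int(mask.sum())
    diameter = float(2 * np.sqrt(area / np.pi))         # diameter of a circle of equal area
    ys, xs = np.nonzero(mask)
    coords = np.stack([xs, ys], axis=1).astype(float)
    eigvals = np.linalg.eigvalsh(np.cov(coords, rowvar=False))  # ascending order
    elongation = float(np.sqrt(eigvals[1] / eigvals[0])) if eigvals[0] > 0 else float("inf")
    return {"mean_rgb": pixels.mean(axis=0), "entropy_bits": entropy,
            "equiv_diameter_px": diameter, "elongation": elongation}


def saliency_in_mask(saliency: np.ndarray, mask: np.ndarray) -> float:
    """Fraction of total (non-negative) saliency that falls on shell pixels."""
    s = np.clip(saliency, 0, None)
    total = s.sum()
    return float(s[mask].sum() / total) if total > 0 else 0.0
```

Because every descriptor ignores pixels outside the mask, a change of backdrop cannot alter them, and a low `saliency_in_mask` score on held-out studio images is a cheap warning that a trained CNN is attending to the background.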

This use case primarily concerns controlled morphometric analysis under standardized imaging conditions. For tasks aimed at species delimitation or the characterization of natural phenotypic variability, representations trained on a combination of studio and field imagery are more appropriate.

Use Case 2: Species Classification

Automated species identification from shell images is a common application of CNNs in taxonomy, conservation, and citizen science. The optimal training domain for this use case depends on deployment: Will the classifier be applied to curated museum images or to photos taken in the wild by non-experts? If the goal is an identification tool for researchers or collections (where images are studio-quality), a CNN trained on studio images can perform extremely well on similar controlled images. As noted above, large benchmarks of studio images (e.g. Zhang et al.’s 59k-image dataset) have demonstrated high accuracy in shell species classification using features of color, shape, and texture [11, 12, 13]. These models effectively learn the intrinsic visual signatures that differentiate species. For example, subtle differences in the patterning of two cowrie species or the shape of the aperture in cone snails can be picked up by a CNN when extraneous variation is minimized. In taxonomic research settings, this is valuable for identifying species from photographs of museum specimens or digital collections.

If the classifier will be used on field images (e.g. a mobile app for beachcombers or analyzing ecological survey photos), training exclusively on pristine studio images can lead to poor real-world performance. This is due to domain shift – the statistical differences between the training set (lab photos) and target set (field photos). Models trained on uniform backgrounds often fail to generalize to cluttered backgrounds, different lighting, or partial occlusions. In such cases, it is crucial to either include field images in the training mix or apply domain adaptation techniques. A recent large-scale effort by Valverde and Solano (2025) [16] illustrates this approach: to classify confiscated seashells by their ecosystem of origin, they built a dataset of ~19,000 images drawn from diverse sources, including online shell catalogues (e.g. Conchology Inc.) and citizen science platforms like iNaturalist [16]. By curating images of each species from both controlled sources and in-situ photographs, they ensured that the CNN saw “diverse positions, backgrounds, and environmental conditions” during training to enhance robustness. In other words, the model was exposed to shells on various substrates, under different lighting, in close-up as well as in habitat, etc., rather than only uniform studio images. This approach led to a classifier that could generalize better to real confiscated shells, which might be photographed under suboptimal conditions. The importance of mixing domains is also echoed in the insect classification realm: Jain et al. (2024) [17] created the AMI benchmark with museum images and camera-trap field images for insect species, specifically to test models’ out-of-distribution performance under wild conditions [17]. Their findings showed that models trained on the curated images suffered performance drops on field photos, but incorporating data augmentations or adaptation significantly improved the field accuracy. 
In fact, simple adaptation techniques, such as a mixed-resolution augmentation that simulates lower-quality field imagery, boosted classification accuracy on wild insect photos by 4–7% compared with no adaptation [17]. By analogy, this suggests that a mollusc shell classifier intended for in-situ use should not rely solely on high-quality studio training data.
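A resolution-degradation augmentation in the spirit of the one described for [17] can be sketched as: downsample by a randomly chosen factor, then upsample back to the original size, so that the network sees both sharp and coarse versions of the same shell. This is our own minimal version (block averaging followed by nearest-neighbor upsampling, with factors drawn from an illustrative list), not the exact procedure of [17].

```python
import numpy as np

def degrade_resolution(image: np.ndarray, factor: int) -> np.ndarray:
    """Simulate a low-resolution capture: average over factor x factor blocks,
    then repeat each block value to restore the original height and width.
    Leftover edge rows/columns (if H or W is not divisible) keep their values."""
    if factor <= 1:
        return image.astype(float)
    h, w = image.shape[:2]
    h_c, w_c = h - h % factor, w - w % factor          # region that blocks tile exactly
    img = image[:h_c, :w_c].astype(float)
    small = img.reshape(h_c // factor, factor, w_c // factor, factor, -1).mean(axis=(1, 3))
    up = small.repeat(factor, axis=0).repeat(factor, axis=1)
    out = image.astype(float).copy()
    out[:h_c, :w_c] = up.reshape(img.shape)
    return out


def mixed_resolution(image: np.ndarray, rng: np.random.Generator,
                     factors=(1, 2, 4, 8)) -> np.ndarray:
    """Apply degrade_resolution with a randomly chosen factor (1 = unchanged)."""
    return degrade_resolution(image, int(rng.choice(factors)))
```

Keeping factor 1 in the list means some training images stay at full resolution, so the model is not pushed to discard fine detail entirely.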

Feature learning implications: A CNN trained on field images will naturally learn to handle background variation, but as mentioned, it may also pick up extrinsic contextual features. For example, if in the training set Species A images often show a rocky tidepool background and Species B images show sandy beach, the CNN might erroneously use “rock” vs “sand” as a cue for classification. This can be problematic if those species appear in different contexts in new images. By contrast, a CNN trained on pure studio images is more likely to base its decision on the shell’s morphology (since every image may share the same neutral backdrop), although, as discussed above, studio artifacts can still act as shortcuts. To mitigate context bias in species classifiers, researchers can employ a few strategies:

- Balanced domain training: Include a variety of backgrounds for each species in training so that no single background is uniquely associated with a given class. Valverde and Solano [16] did this by sourcing images from multiple platforms. In effect, the CNN sees each species in multiple settings, encouraging it to focus on the shell itself.

- Image preprocessing: Use segmentation or object detection to first isolate the shell from the background in field images, then classify the cropped shell. This two-stage approach ensures the classifier mostly sees shell pixels. It was used effectively in the cockle shell study, where segmentation preceded classification [15].

- Data augmentation: Randomly augment background, lighting, and noise during training. This domain randomization makes the model invariant to those factors. For instance, one could overlay studio shell images onto random background scenes to synthetically “field-ize” the training data. Blair et al. (2025) [18] showed that supplementing controlled images with synthetic variants and fine-tuning greatly improved generalization: a model trained on perfect museum specimen images achieved only ~55% accuracy on real-world photos, but after applying domain adaptation techniques (including fine-tuning on a small set of real images and using domain alignment loss), accuracy jumped to 95% [18]. This remarkable gain underscores the value of adaptation – the model’s features shifted from over-relying on idealized traits to capturing those that persist in real photographs.
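The "field-izing" augmentation mentioned in the list can be sketched as mask-based compositing: paste the segmented studio shell onto a random crop of a background scene, then jitter global contrast and brightness. This assumes a shell mask is available for each studio image and that background scenes are at least as large as the shell image; the jitter ranges are arbitrary illustrative values, and this is not the pipeline of [18].

```python
import numpy as np

def composite_on_background(shell: np.ndarray, mask: np.ndarray, background: np.ndarray,
                            rng: np.random.Generator,
                            brightness=(-30.0, 30.0), contrast=(0.8, 1.2)) -> np.ndarray:
    """Paste shell pixels (mask == True) onto a random crop of `background`,
    then apply a random global contrast/brightness change, clipped to [0, 255].

    shell: HxWx3 studio image; mask: HxW boolean; background: larger scene image.
    """
    h, w = mask.shape
    bh, bw = background.shape[:2]
    top = rng.integers(0, bh - h + 1)                      # random crop position
    left = rng.integers(0, bw - w + 1)
    out = background[top:top + h, left:left + w].astype(float).copy()
    out[mask] = shell[mask]                                # shell replaces background
    alpha = rng.uniform(*contrast)
    beta = rng.uniform(*brightness)
    out = (out - out.mean()) * alpha + out.mean() + beta   # contrast about the mean, then shift
    return np.clip(out, 0, 255)
```

Drawing a different background crop for every epoch is what breaks any fixed pairing between a species and its backdrop; the photometric jitter additionally discourages reliance on absolute brightness.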

In summary, for species classification, the training domain should match the intended usage. Use studio images for applications on curated photos (this yields models that excel at morphological discrimination), but include field images (or simulate them) for models that need to work on natural photographs. Whenever a domain gap exists, consider domain adaptation or fine-tuning to bridge the difference.

Use Case 3: Detection and Classification of Shells in the Field

Detecting shells in situ – for example, finding all shells in a beach scene or undersea photo and identifying them – is a challenging task that combines object detection/segmentation with classification. In this scenario, training on field images is almost indispensable. The model (e.g. a region-based CNN or a segmentation network) must learn to distinguish shell pixels from background clutter. Studio images alone are insufficient for detection, since they contain no clutter or occlusion; a detector trained only on isolated shell photos would likely misidentify rocks, coral pieces, or sand patterns as shells (false positives) or miss partially buried shells. Therefore, datasets for shell detection need to reflect realistic conditions: shells of varying sizes, possibly partially covered by sand or debris, different camera angles, and diverse backgrounds.

One case study comes from materials engineering: Liu et al. (2023) [19] needed to identify tiny shell fragments mixed in sea sand (as shells can weaken concrete made with sea sand). They captured 960 high-resolution photos of actual sea sand spread out with shell pieces, yielding 2,199 labeled shell instances for segmentation [19]. By training state-of-the-art segmentation CNNs (PointRend, DeepLabv3+) on this field-like dataset, they achieved high accuracy in isolating shells of different shapes, sizes, and textures. Notably, they introduced a controlled imaging method to ensure shells were visible (avoiding complete overlap) and had “well-defined boundaries,” which significantly improved the model’s performance (PointRend achieved the highest Intersection-over-Union and pixel accuracy). This underscores a subtle point: even when using field images, one can control certain conditions (e.g. photographing in a way that shells aren’t too obscured) to make the learning task feasible. The CNN in this case learned robust features to distinguish shell material from sand based on color and texture differences, despite the noisy background. Importantly, because the training data was drawn from real mixed scenes, the model’s learned features included those necessary to handle occlusion and noise – for instance, recognizing the curved edge of a shell even if part of it is buried, or differentiating the smooth lustre of a shell from matte pebbles. Such features are crucial for detection in the field.
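For reference, the two segmentation metrics mentioned above are commonly defined for a binary shell mask as IoU = |P ∩ G| / |P ∪ G| and pixel accuracy = correctly labeled pixels / all pixels, where P is the predicted mask and G the ground truth. The minimal NumPy version below is for a single image; returning 1.0 when both masks are empty is our own convention, and published results typically aggregate these scores over images or classes in ways this sketch does not attempt.

```python
import numpy as np

def iou(pred: np.ndarray, truth: np.ndarray) -> float:
    """Intersection-over-Union of two boolean masks (1.0 if both are empty)."""
    pred, truth = pred.astype(bool), truth.astype(bool)
    union = np.logical_or(pred, truth).sum()
    if union == 0:
        return 1.0
    return float(np.logical_and(pred, truth).sum() / union)


def pixel_accuracy(pred: np.ndarray, truth: np.ndarray) -> float:
    """Fraction of pixels whose shell/background label matches the ground truth."""
    return float((pred.astype(bool) == truth.astype(bool)).mean())
```

Pixel accuracy can look high on sand images where shells cover few pixels even when most shell pixels are missed, which is why IoU is usually the more informative of the two for small fragments.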

For broader wildlife monitoring (e.g. surveying molluscs in the intertidal zone), the pattern holds: a detector must be trained (or at least fine-tuned) on representative field imagery. If one only has studio images of shells, an approach is to use synthetic data generation: create composite images by inserting shell images onto background scenes, possibly with random rotations, scales, and occlusion. This can provide an initial training set for detection. But again, without adaptation, a gap remains: synthetic composites might not capture all real-world complexity (lighting gradients, true 3D occlusions, etc.). Domain adaptation methods (e.g. adversarial training to align feature distributions from synthetic and real domains) could be applied. In one aquaculture study, unsupervised domain adaptation was used to improve fish detection across different farm environments [20], suggesting that similar techniques could help shell detection across lab and field domains.
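When shells are pasted into background scenes for detection training, bounding-box labels can be derived from the pasted mask itself, and instances left mostly hidden by occlusion can be dropped. The sketch below assumes a deliberately simple occlusion model (a rectangle of the original scene painted back over part of the shell, mimicking partial burial), outputs boxes as normalized center-x, center-y, width, height as used in YOLO-style label files, and uses an illustrative 30% visibility threshold; none of these choices come from the cited studies.

```python
import numpy as np

def paste_with_label(scene: np.ndarray, shell: np.ndarray, mask: np.ndarray,
                     top: int, left: int, occluder=None, min_visible: float = 0.3):
    """Paste a masked shell into `scene` at (top, left) and return (image, box).

    Assumes the shell fits inside the scene at that position.
    occluder: optional (y0, x0, y1, x1) rectangle in scene coordinates that is
    restored to the original scene, hiding that part of the shell.
    box: (cx, cy, w, h) normalized to [0, 1], or None if too little shell is visible.
    """
    out = scene.astype(float).copy()
    h, w = mask.shape
    placed = np.zeros(scene.shape[:2], dtype=bool)
    placed[top:top + h, left:left + w] = mask
    out[placed] = shell[mask]                          # copy shell pixels into the scene
    if occluder is not None:
        y0, x0, y1, x1 = occluder
        out[y0:y1, x0:x1] = scene[y0:y1, x0:x1]        # background covers the shell here
        placed[y0:y1, x0:x1] = False
    visible = placed.sum() / max(mask.sum(), 1)
    if visible < min_visible:
        return out, None                               # too occluded to label reliably
    ys, xs = np.nonzero(placed)
    H, W = scene.shape[:2]
    x0b, x1b, y0b, y1b = xs.min(), xs.max() + 1, ys.min(), ys.max() + 1
    box = ((x0b + x1b) / 2 / W, (y0b + y1b) / 2 / H, (x1b - x0b) / W, (y1b - y0b) / H)
    return out, box
```

Computing the box from the visible pixels, rather than from the unoccluded shell, teaches the detector to localize what is actually in view, which matches how partially buried shells would be annotated in real field photos.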

In practice, a strong approach for in-situ shell detection/classification is a hybrid pipeline: use a CNN to first detect or segment shell regions in the field image, then pass those regions to a classifier (which could even be trained on studio images). This way, detection is learned on field data, while classification leverages a model trained on high-quality images focusing on morphology. This two-step system helps ensure that the final identification relies on shell-intrinsic features, while the detection stage absorbs the extrinsic variability. Because cropped field shells still differ from studio images in lighting, pose, and occlusion, fine-tuning the classifier on some field crops may still be needed. For example, one might train a YOLO or Mask R-CNN model on a moderate set of annotated field images to localize shells, and separately train a classifier on a large curated shell image dataset. When deployed, the detector finds candidate shells, and the classifier (viewing a cropped shell) identifies the species. Such an approach leverages the best of both domains: the robustness of field-trained detectors and the accuracy of morphology-trained classifiers.
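The glue logic of such a two-stage pipeline can be sketched independently of any particular model. Here `detector` and `classifier` are hypothetical callables standing in for, say, a field-trained YOLO or Mask R-CNN model and a studio-trained classifier; the code only shows cropping each confident detection with a small margin (so the classifier sees the whole shell outline) and passing the crop on. The 10% margin and the 0.5 score threshold are illustrative values.

```python
from typing import Callable, List, Tuple

import numpy as np

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixels; x1 and y1 exclusive


def crop_with_margin(image: np.ndarray, box: Box, margin: float = 0.1) -> np.ndarray:
    """Crop `box` enlarged by `margin` of its width/height on each side, clipped to the image."""
    x0, y0, x1, y1 = box
    dx, dy = int(round((x1 - x0) * margin)), int(round((y1 - y0) * margin))
    H, W = image.shape[:2]
    return image[max(0, y0 - dy):min(H, y1 + dy), max(0, x0 - dx):min(W, x1 + dx)]


def detect_then_classify(image: np.ndarray,
                         detector: Callable[[np.ndarray], List[Tuple[Box, float]]],
                         classifier: Callable[[np.ndarray], str],
                         min_score: float = 0.5) -> List[Tuple[Box, str]]:
    """Run a (field-trained) detector, then classify each confident crop separately."""
    results = []
    for box, score in detector(image):
        if score < min_score:
            continue  # skip low-confidence detections
        results.append((box, classifier(crop_with_margin(image, box))))
    return results
```

Keeping the two stages behind plain function interfaces makes it easy to swap in a classifier fine-tuned on field crops later without retraining the detector.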

Bridging the Divide: Strategies for Optimal Feature Learning in Seashell Research

Given the distinct advantages and disadvantages of studio and field imagery for different research goals, a crucial question arises: how can researchers optimize CNN feature learning to best serve their specific needs in seashell research? Several strategies, ranging from data manipulation to advanced model architectures and training paradigms, can help bridge the divide between the controlled studio environment and the unpredictable field, or enhance the quality of features learned within each.

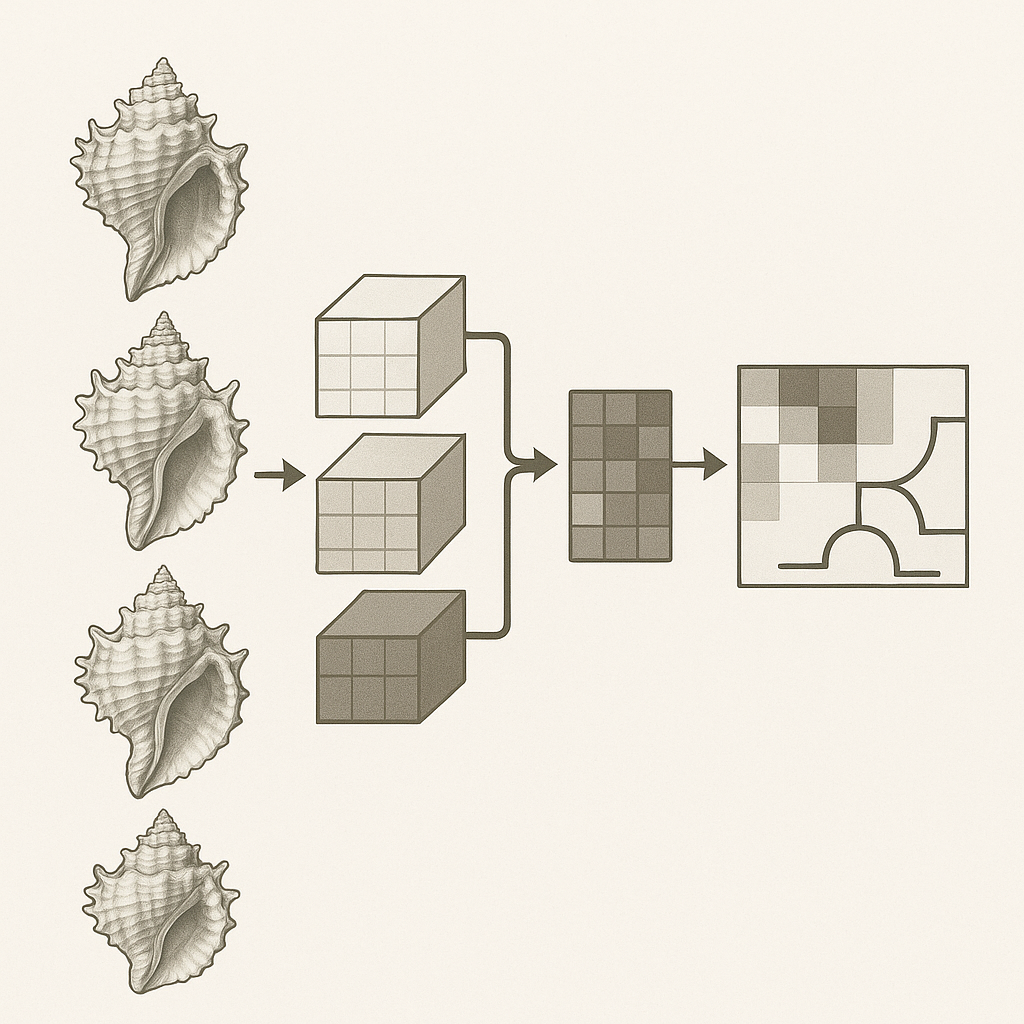

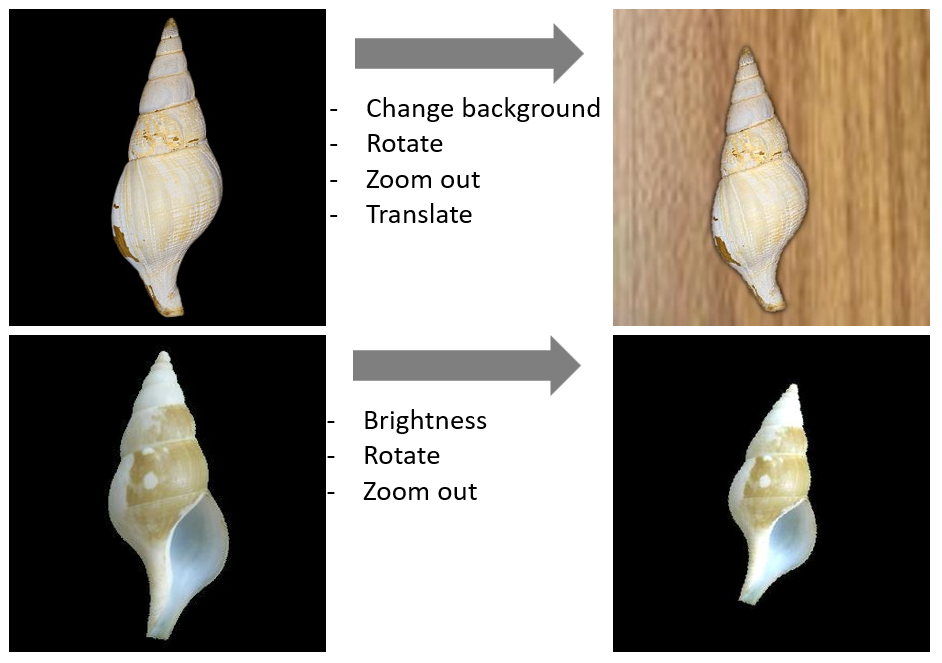

Data Augmentation: This is a foundational technique for improving model robustness and generalization, particularly when field data are limited or when a wider range of conditions must be simulated than the available dataset contains. For seashell research, augmentations could include:

- Geometric transformations: rotations, flips, scaling, cropping

- Photometric transformations: adjustments to brightness, contrast, saturation, hue

- Simulating environmental conditions: adding synthetic backgrounds (various types of sand, pebbles, rocks), partial occlusions (simulated seaweed, debris), color cast, and lighting variations, similar to the turbidity simulation performed for fish classification

- Elastic deformations to simulate minor shape variations

One study reported a 7.2% accuracy improvement in fish classification directly attributable to data augmentation. Another study emphasizes the importance of data augmentation with realistic distortions for improving object detection performance in uncontrolled environments. However, a critical consideration is that overly aggressive or unrealistic augmentations might inadvertently obscure very fine morphological details essential for species variability studies. The key is to employ targeted and realistic augmentations that expand the training distribution in a meaningful way without destroying crucial signal [1].
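The "simulating environmental conditions" item can be made concrete with a widely used simplified image-formation model for haze and turbid water, I = J·t + B·(1 − t), where J is the clean image, B a veiling-light color, and t = exp(−β·d) a per-channel transmission for attenuation coefficients β and distance d. The sketch below assumes a single distance for the whole image and illustrative coefficients that attenuate red fastest, as is typical underwater; real turbidity varies with depth, water type, and scene geometry, so this is a coarse augmentation rather than a physical simulation.

```python
import numpy as np

def simulate_turbidity(image: np.ndarray, distance: float,
                       beta=(0.6, 0.25, 0.15), veil=(20.0, 90.0, 110.0)) -> np.ndarray:
    """Apply I = J * t + B * (1 - t) with per-channel transmission t = exp(-beta * d).

    image: HxWx3 RGB array in [0, 255]. beta (per meter) and veil (RGB) are
    illustrative values, not calibrated measurements.
    """
    t = np.exp(-np.asarray(beta, dtype=float) * distance)   # shape (3,), broadcast over pixels
    out = image.astype(float) * t + np.asarray(veil, dtype=float) * (1.0 - t)
    return np.clip(out, 0, 255)
```

Sampling `distance` per training image (e.g. between 0 and a few meters) gives a graded range from clear to murky views; keeping small distances in the mix limits the risk, noted above, of washing out fine morphological detail.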

Figure 3: Examples of data augmentation techniques to diversify studio imagery. (Left) Original studio image. (Top right) Shell placed on a synthetic natural background, zoomed out and slightly rotated. (Bottom right) Shell zoomed out, rotated, and with altered brightness.

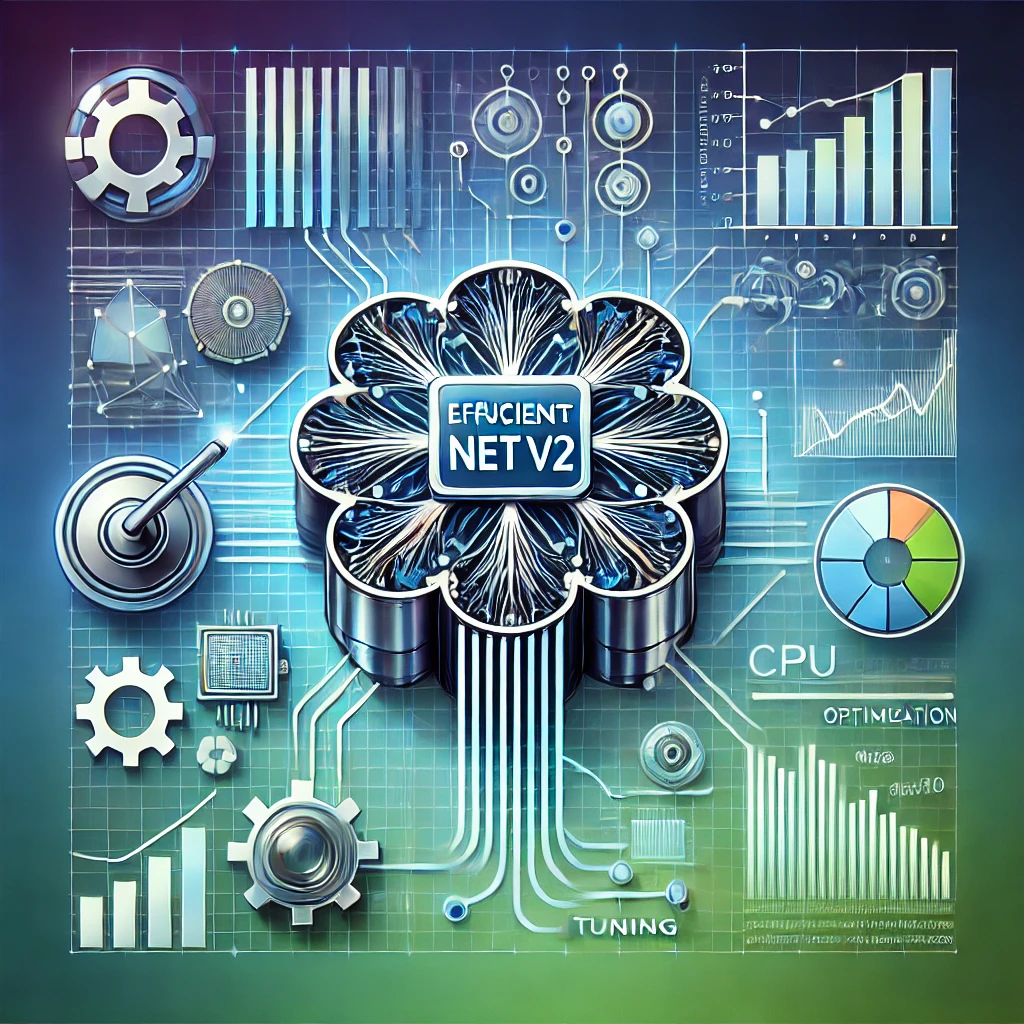

Transfer Learning and Domain Adaptation (DA): These techniques are vital for leveraging knowledge from existing datasets and for mitigating the negative effects of domain shift.

- Transfer Learning: Involves using models pre-trained on large, general-purpose image datasets (e.g., ImageNet) and then fine-tuning them on more specific seashell datasets (either studio or field). This approach can significantly boost performance, accelerate training, and reduce the need for massive, task-specific labeled datasets. For instance, a 10.2% improvement was observed in fish classification when using transfer learning compared to training a custom CNN from scratch. The survey by Pan & Yang (2010) provides a foundational overview of transfer learning principles.

- Domain Adaptation (DA): This is particularly essential for bridging the gap between studio (source domain) and field (target domain) imagery, or even between different types of field environments (e.g., intertidal vs. deep sea). DA methods aim to learn features that are invariant across domains or to explicitly map features from the source domain to the target domain.
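As one concrete feature-alignment objective, chosen here for brevity (it is not necessarily the domain alignment loss used in [18], and adversarial methods are a common alternative), CORAL (CORrelation ALignment) penalizes the difference between the feature covariance matrices of a source batch (studio) and a target batch (field): L = ‖C_S − C_T‖²_F / (4d²), where d is the feature dimension. The NumPy version below only computes the loss value on pre-extracted features; in training it would be added to the classification loss and minimized with an autodiff framework.

```python
import numpy as np

def coral_loss(source: np.ndarray, target: np.ndarray) -> float:
    """CORAL distance between two feature batches of shape (n_samples, d)."""
    d = source.shape[1]
    c_s = np.cov(source, rowvar=False)   # (d, d) covariance with n-1 denominator
    c_t = np.cov(target, rowvar=False)
    return float(np.sum((c_s - c_t) ** 2) / (4 * d * d))   # squared Frobenius norm / 4d^2
```

CORAL only aligns second-order statistics: a constant offset between studio and field features gives zero loss, so it addresses differences in feature spread and correlation rather than in feature means.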

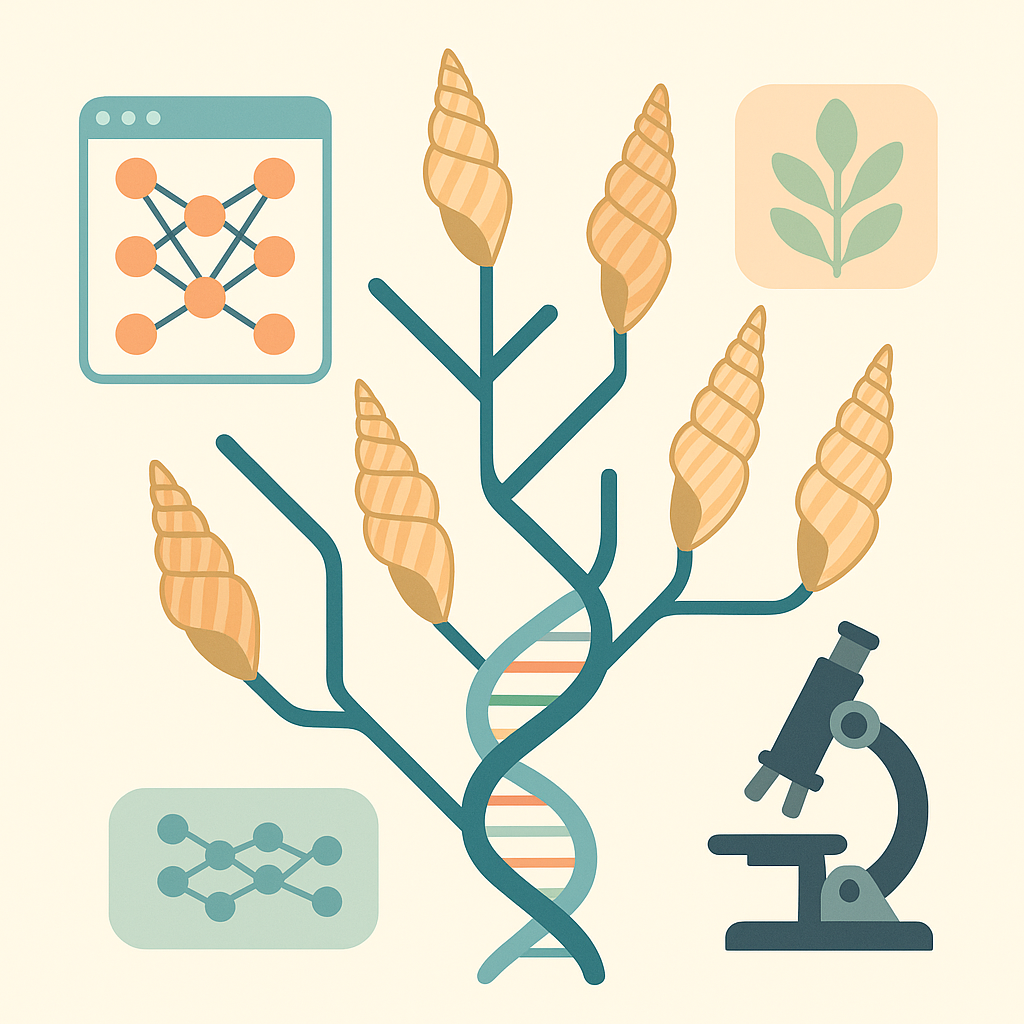
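Many DA methods exist. As one simple, concrete example, the sketch below implements CORAL (correlation alignment, Sun et al.), which re-colours studio-domain features so that their covariance matches that of field-domain features before a classifier is trained on them. It assumes features have already been extracted, for example from the penultimate layer of a pre-trained CNN; the `eps` regulariser defaults to the identity term used in the original CORAL formulation, and the feature arrays in the usage lines are synthetic.

```python
import numpy as np


def _sym_matrix_power(c, power):
    """Raise a symmetric positive-definite matrix to a real power via eigendecomposition."""
    vals, vecs = np.linalg.eigh(c)
    return (vecs * vals ** power) @ vecs.T


def coral(source, target, eps=1.0):
    """CORAL: whiten source features, then re-colour them with the target covariance.

    source: (n_s, d) features from studio images (source domain).
    target: (n_t, d) features from field images (target domain).
    eps: ridge added to both covariances to keep them invertible.
    """
    d = source.shape[1]
    cs = np.cov(source, rowvar=False) + eps * np.eye(d)
    ct = np.cov(target, rowvar=False) + eps * np.eye(d)
    whitened = source @ _sym_matrix_power(cs, -0.5)   # remove source correlations
    return whitened @ _sym_matrix_power(ct, 0.5)      # impose target correlations


# Usage with synthetic features: field features have a different covariance structure.
rng = np.random.default_rng(0)
studio_feats = rng.normal(size=(500, 4))
mix = np.array([[2.0, 0.5, 0.0, 0.0],
                [0.0, 1.0, 0.3, 0.0],
                [0.0, 0.0, 0.5, 0.0],
                [0.2, 0.0, 0.0, 1.5]])
field_feats = rng.normal(size=(400, 4)) @ mix
aligned = coral(studio_feats, field_feats, eps=1e-6)
```

A classifier trained on `aligned` (with the studio labels) is then applied to the field features unchanged. CORAL only matches second-order statistics; adversarial approaches such as the one in [20] try to align the feature distributions more completely.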

Feature Disentanglement: This class of techniques aims to train models that can separate the learned features into distinct, interpretable components.

In the context of seashell research, this could mean disentangling object-intrinsic properties (e.g., the shell's true shape, color, and texture) from contextual factors (e.g., background elements, lighting conditions, pose). Such capabilities would be highly valuable for deriving accurate morphometric data from field images, where isolating the shell's true morphology from the confounding influences of its environment is a primary challenge. For example, research in video analysis has explored methods like MOBNET for disentangling motion, object, and background in complex scenes [22]; although developed for video, the core concept of separating an object of interest from its background is directly applicable. Furthermore, in phylogenetics, disentangled networks have been suggested as a way to better interpret deep learning-derived morphological traits [21].

True feature disentanglement implies that the model gains some understanding of the causal factors of variation in the image data. Achieving this robustly with current CNN architectures often requires specialized network designs and carefully formulated loss functions, and it remains an active and challenging area of research. For instance, developing models that can provably disentangle the subtle textural patterns of a weathered shell from the texture of its sandy substrate, or separate true shell color from lighting-induced color casts in field images, represents a significant hurdle. Future work could explore adapting existing disentanglement frameworks or developing novel architectures tailored to the nuances of biological specimens such as mollusc shells, potentially by incorporating prior knowledge about shell growth patterns or material properties into the learning process.
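One concrete example of such a loss term is the cross-covariance (XCov) penalty of Cheung et al., which discourages a "shell" block of latent codes from sharing linear information with a "context" block. The NumPy sketch below computes it for one batch; in a real model it would be added to a reconstruction or classification loss. The split into two latent blocks, the function name and the synthetic data are assumptions for illustration, and removing linear correlation is a much weaker condition than the causal disentanglement described above.

```python
import numpy as np


def xcov_penalty(shell_latent, context_latent):
    """Cross-covariance (XCov) penalty between two blocks of latent codes.

    shell_latent: (N, p) codes intended to describe the shell itself.
    context_latent: (N, q) codes intended to describe background, lighting and pose.
    Returns 0.5 * sum_ij C_ij**2, where C is the batch cross-covariance matrix;
    minimising it pushes the two blocks towards linear decorrelation.
    """
    y = shell_latent - shell_latent.mean(axis=0)
    z = context_latent - context_latent.mean(axis=0)
    c = y.T @ z / y.shape[0]  # (p, q) cross-covariance
    return 0.5 * float(np.sum(c ** 2))


# Usage: context codes that copy shell information are penalised heavily.
rng = np.random.default_rng(1)
shell_codes = rng.normal(size=(1000, 8))
independent_context = rng.normal(size=(1000, 4))
leaky_context = shell_codes[:, :4] + 0.1 * rng.normal(size=(1000, 4))
penalty_independent = xcov_penalty(shell_codes, independent_context)
penalty_leaky = xcov_penalty(shell_codes, leaky_context)
```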

Understanding and Trusting Model Decisions: The Role of Explainable AI (XAI)

As CNNs become more powerful and are applied to increasingly complex scientific questions, the "black box" nature of these models, whose internal decision-making processes are not inherently transparent, becomes a significant concern. Explainable AI (XAI) encompasses a suite of techniques designed to provide insights into how these models arrive at their predictions, fostering trust, enabling debugging, and potentially leading to new scientific discoveries [23].

One prominent XAI technique is Gradient-weighted Class Activation Mapping (Grad-CAM). Grad-CAM generates heatmaps that visually highlight the regions of an input image that were most influential in a CNN's decision for a particular class. For seashell research, this is invaluable: it allows researchers to ascertain whether the CNN is genuinely focusing on the seashell and its relevant morphological features, or whether its predictions are driven by irrelevant background elements, contextual cues, or even imaging artifacts. For example, Grad-CAM was used to confirm that a fish classification model was indeed focusing on meaningful features of the fish rather than on background noise [1]. Another example is a classification model for the gastropod genus Harpa, where the shell features used to distinguish Harpa species were identified [24].
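The Grad-CAM computation itself is short: the gradients of the class score with respect to a convolutional layer's feature maps are global-average-pooled into one weight per channel, the weighted sum of the feature maps is passed through a ReLU, and the result is upsampled to image size. The NumPy sketch below assumes the activations and gradients have already been captured for one image (in practice via the deep learning framework's hooks) and uses nearest-neighbour rather than bilinear upsampling for simplicity; the toy arrays stand in for a "shell" channel and a "background" channel. Averaging such maps over many images of one species gives an average heatmap like the one in Figure 4.

```python
import numpy as np


def grad_cam(activations, gradients, out_size=None):
    """Grad-CAM heatmap for one image and one target class.

    activations: (K, h, w) feature maps A^k of a convolutional layer.
    gradients:   (K, h, w) gradients of the class score y^c with respect to A^k.
    out_size:    optional (H, W) to upsample to (nearest neighbour).
    Returns a non-negative map scaled so that its maximum is 1 (if non-zero).
    """
    weights = gradients.mean(axis=(1, 2))                           # alpha_k: pooled gradients
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0)  # ReLU(sum_k alpha_k A^k)
    if cam.max() > 0:
        cam = cam / cam.max()
    if out_size is not None:
        H, W = out_size
        rows = np.arange(H) * cam.shape[0] // H
        cols = np.arange(W) * cam.shape[1] // W
        cam = cam[rows][:, cols]
    return cam


# Toy example: channel 0 fires on the shell, channel 1 on background texture.
A = np.zeros((2, 4, 4))
A[0, :2, :2] = 1.0
A[1, 2:, 2:] = 1.0
G = np.stack([np.full((4, 4), 0.5), np.full((4, 4), -0.5)])  # class score rises with channel 0
heatmap = grad_cam(A, G, out_size=(8, 8))
```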


Figure 4: Grad-CAM visualizations for interpreting CNN decisions. (Left) Average heatmap for Harpa major. (Right) Overlay of the heatmap on an original image of the shell.

Identifying Shortcut Learning and Dataset Biases: XAI tools, including Grad-CAM, can be instrumental in uncovering instances of shortcut learning, where a model relies on spurious correlations within the training data rather than learning true causal relationships. If a particular background type consistently co-occurs with a certain seashell species in the training set, Grad-CAM might reveal that the model is primarily activating on the background, not the shell. This was exemplified in cases where Grad-CAM showed misclassifications occurring because the model focused on background elements rather than the target objects. Identifying such biases is crucial for debugging models and ensuring that they are learning generalizable, biologically relevant features, rather than merely exploiting idiosyncrasies of the dataset [25].
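Background reliance can also be quantified rather than only inspected visually. As a minimal sketch, assuming a binary shell mask is available for each evaluation image (from manual annotation or a segmentation model), the fraction of Grad-CAM energy that falls on the shell gives a per-image score. The function name and the toy arrays below are hypothetical.

```python
import numpy as np


def on_shell_energy(cam, shell_mask):
    """Fraction of a heatmap's total energy that lies on shell pixels.

    cam: (H, W) non-negative heatmap, e.g. from Grad-CAM.
    shell_mask: (H, W) bool array, True on the shell.
    Values near 1 indicate attention on the shell; low values suggest background reliance.
    """
    total = cam.sum()
    if total == 0:
        return 0.0
    return float(cam[shell_mask].sum() / total)


# Toy example: one heatmap focused on the shell, one dominated by background.
mask = np.zeros((8, 8), dtype=bool)
mask[2:6, 2:6] = True
focused = np.zeros((8, 8))
focused[3:5, 3:5] = 1.0
distracted = np.ones((8, 8))
distracted[2:6, 2:6] = 0.1
```

A low score on a single image does not prove shortcut learning, but consistently low scores for one class are a strong hint to inspect that class's training images.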

For scientific applications such as species variability studies or ecological monitoring using field classification, understanding why a model makes a particular prediction is vital for establishing trust in its outputs and for validating its scientific utility. XAI techniques help bridge the gap between a CNN's complex internal workings and human understanding, making the decision process more interpretable.

The insights gained from XAI are not merely diagnostic; they can actively inform the iterative process of data collection and model retraining. If Grad-CAM consistently reveals that a model is focusing on a particular irrelevant background feature when classifying a certain species, this signals a clear bias in the training dataset. This information can then guide efforts to address this bias, for example, by collecting more images of that species with diverse backgrounds, by augmenting the existing data to break the spurious correlation, or even by guiding modifications to the model architecture (e.g., incorporating attention mechanisms designed to better focus on the object of interest).
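This feedback loop can be made systematic. The plain-Python sketch below aggregates per-image on-shell attention fractions (the share of heatmap energy falling inside a shell mask) by species and returns the species whose attention falls mostly on the background, as candidates for additional images with more diverse backgrounds or for targeted augmentation. The function name, the threshold and the minimum image count are illustrative assumptions, not validated values.

```python
from collections import defaultdict
from statistics import mean


def flag_background_biased_species(records, threshold=0.5, min_images=5):
    """Return (species, mean_fraction) pairs whose attention is mostly off the shell.

    records: iterable of (species, on_shell_fraction) pairs, one per validation image.
    Species with fewer than `min_images` images are skipped as too noisy to judge.
    The result is sorted with the most background-reliant species first.
    """
    by_species = defaultdict(list)
    for species, fraction in records:
        by_species[species].append(fraction)

    flagged = []
    for species, values in by_species.items():
        if len(values) >= min_images:
            avg = mean(values)
            if avg < threshold:
                flagged.append((species, avg))
    return sorted(flagged, key=lambda item: item[1])


# Usage with made-up fractions: only Harpa major is flagged;
# the hypothetical species has too few images to be judged.
records = ([("Conus ventricosus", 0.8)] * 6
           + [("Harpa major", 0.3)] * 6
           + [("Hypothetical sp.", 0.1)] * 2)
result = flag_background_biased_species(records)
```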

However, it is important to acknowledge that the "explanations" provided by current XAI methods like Grad-CAM are typically post-hoc interpretations based on correlations. They effectively show what parts of the image the model "looked at" (i.e., which regions had high gradients for the predicted class), but they do not always elucidate the deeper reasoning behind that attention, nor do they guarantee that this pattern of attention is robust across all possible input variations. Grad-CAM might show focus on the correct part of a seashell, but this does not fully preclude the possibility that the model has also learned subtle, spurious correlations that are harder to visualize, or that the highlighted region, while correlated, is not robustly discriminative across all unseen contexts.

Consequently, ongoing research into more advanced XAI techniques that move beyond correlational heatmaps towards explanations that reflect causal relationships or provide formal robustness guarantees for interpretations will be vital for increasing the reliability and adoption of CNNs in rigorous scientific inquiry.

Conclusion

In conclusion, the choice of studio vs. field images in CNN training has a profound effect on what the model learns. Studio images tend to produce models that emphasize intrinsic shell morphology, making them well suited for tasks requiring fine morphometric distinctions and controlled classification. Field images (and models trained on them) are necessary for robust real-world performance, teaching the CNN to handle noise, but care must be taken to prevent extrinsic environmental context from dominating the decisions. The best solution often lies in a balanced approach: use controlled images to home in on shell features, but also incorporate the variability of natural scenes through field data or augmentation so that the model can confidently apply its knowledge outside the lab. By following best practices in dataset preparation and domain adaptation, CNNs can more reliably reflect biologically meaningful shell characteristics and perform consistently across controlled and natural imaging conditions.

Further research could focus on systematically evaluating the sensitivity of CNNs to specific, quantifiable environmental variables present in field imagery (e.g., levels of sediment cover, degrees of biofouling, specific ranges of light intensity/angle). Developing benchmark datasets where these variables are controlled or annotated could pave the way for models that not only are robust to such variations but can also explicitly account for or even correct their influence when extracting morphological data.
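As a minimal sketch of such an evaluation, assuming each field image has been annotated with a numeric environmental variable (here a hypothetical sediment-cover fraction) and each prediction has been scored as correct or incorrect, accuracy can be reported per level of that variable; a steady drop across bins would indicate sensitivity to it. The function name, bin edges and data below are illustrative.

```python
import numpy as np


def accuracy_by_level(levels, correct, bin_edges):
    """Classifier accuracy within bins of an annotated environmental variable.

    levels: (N,) annotated value per image, e.g. fraction of the shell covered by sediment.
    correct: (N,) bool, whether the model's prediction for that image was right.
    bin_edges: increasing edges; image i falls in bin j if edges[j] <= levels[i] < edges[j + 1].
    Values outside [edges[0], edges[-1]) are ignored.
    Returns a list of (low, high, n_images, accuracy) tuples; accuracy is nan for empty bins.
    """
    levels = np.asarray(levels, dtype=float)
    correct = np.asarray(correct, dtype=bool)
    idx = np.digitize(levels, bin_edges) - 1
    rows = []
    for j in range(len(bin_edges) - 1):
        in_bin = idx == j
        n = int(in_bin.sum())
        acc = float(correct[in_bin].mean()) if n else float("nan")
        rows.append((bin_edges[j], bin_edges[j + 1], n, acc))
    return rows


# Usage with made-up annotations for six field images.
levels = [0.0, 0.1, 0.2, 0.5, 0.6, 0.9]
correct = [True, True, True, False, True, False]
rows = accuracy_by_level(levels, correct, [0.0, 0.25, 0.5, 0.75, 1.0])
```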

References

- [1] A Mohammadisabet et al. CNN-Based Optimization for Fish Species Classification: Tackling Environmental Variability, Class Imbalance, and Real-Time Constraints. Information 16, 154 (2025)

- [2] R A Reeb et al. Using Convolutional Neural Networks to Efficiently Extract Immense Phenological Data From Community Science Images. Front Plant Sci 12, 787407 (2022)

- [3] K K Singh et al. Don't Judge an Object by Its Context: Learning to Overcome Contextual Bias. arXiv:2001.03152 [cs.CV] (2020)

- [4] S T Erukude et al. Identifying Bias in Deep Neural Networks Using Image Transforms. arXiv:2412.13079 [cs.CV] (2024)

- [5] M Zimmermann et al. Deep learning-based molecular morphometrics for kidney biopsies. JCI Insight 6(7), e144779 (2021)

- [6] N Murali et al. Shortcut Learning Through the Lens of Early Training Dynamics. arXiv:2302.09344 [cs.LG] (2023)

- [7] J L Poveda Cuellar et al. Deep Learning on field photography reveals the morphometric diversity of Colombian Freshwater Fish. Preprint (Version 1), Research Square (2025)

- [8] X Wang & Z Zhu. Context understanding in computer vision: A survey. Computer Vision and Image Understanding 229, 103646 (2023)

- [9] R Geirhos et al. Shortcut learning in deep neural networks. Nat Mach Intell 2, 665–673 (2020)

- [10] J Xiao et al. Multi-Scale Object Detection with the Pixel Attention Mechanism in a Complex Background. Remote Sensing 14(16), 3969 (2022)

- [11] Q Zhang et al. A shell dataset, for shell features extraction and recognition. Sci Data 6, 226 (2019)

- [12] Ph. Kerremans. A Large-Scale Convolutional Neural Network for Fine-Grained Conus Shell Identification. Identifyshell.org (2025)

- [13] N Qasmi et al. Recognition of Conus species using a combined approach of supervised learning and deep learning-based feature extraction. PLoS ONE 19(12), e0313329 (2024)

- [14] M Tsutsumi et al. A deep learning approach for morphological feature extraction based on variational auto-encoder: an application to mandible shape. npj Syst Biol Appl 9, 30 (2023)

- [15] R Concepción et al. BivalveNet: A hybrid deep neural network for common cockle geographical traceability based on shell image analysis. Ecological Informatics 78, 102344 (2023)

- [16] A Valverde & L Solano. Back Home: A Machine Learning Approach to Seashell Classification and Ecosystem Restoration. arXiv:2501.04873 [cs.CV] (2025)

- [17] A Jain et al. Insect Identification in the Wild: The AMI Dataset. arXiv:2406.12452 [cs.CV] (2024)

- [18] J Blair et al. Leveraging synthetic data produced from museum specimens to train adaptable species classification models. Preprint, ResearchGate

- [19] T Liu et al. Identification and analysis of seashells in sea sand using computer vision and machine learning. Case Studies in Construction Materials 18, e02121 (2023)

- [20] T Zhao et al. Unsupervised adversarial domain adaptation based on interpolation image for fish detection in aquaculture. Computers and Electronics in Agriculture 198, 107004 (2022)

- [21] R Hunt et al. Integrating Deep Learning Derived Morphological Traits and Molecular Data for Total-Evidence Phylogenetics: Lessons from Digitized Collections. Systematic Biology, syae072 (2025)

- [22] X Lin et al. Disentangling Motion, Foreground and Background Features in Videos. arXiv:1707.04092 [cs.CV] (2017)

- [23] L Moreau. AI Explainability with Grad-CAM: Visualizing Neural Network Decisions. Edge Impulse (2025)

- [24] Ph. Kerremans. Unveiling Morphological Insights in Biological Imagery Through CNN Interpretability Techniques. Identifyshell.org (2025)

- [25] V Kamakshi et al. Explainable Image Classification: The Journey So Far and the Road Ahead. AI 4(3), 620–651 (2023)